Feedback Request for Curated Content Views

30 April 2013 • by Bob • IIS, Content

Publishing technical documentation is an interesting business, and a lot of discussion & deliberation goes into the creation process for articles and videos that we produce at Microsoft. For example, when I am writing an article for IIS, should I publish that on www.iis.net, or technet.microsoft.com, or msdn.microsoft.com? Or should I just write a blog about it? And after I have published an article, how will my intended audience find it? As we continue to publish hundreds of technical articles to the websites that I just mentioned, the navigation hierarchy becomes increasingly complex, and content discoverability suffers.

Some time ago a few of our writers began to experiment with a new way to consolidate lists of related content into something that we called a "Content Map." The following pages will show you an example of what the Content Map concept looks like:

Each of these articles received a great deal of positive feedback from customers, but our team wanted to see if there was a way that customers could help us to improve on this design. We know that there is a great deal of third-party content on the Internet, and we wanted a way to recognize that. We also asked several customers about what kinds of content they need to be successful, and we added their suggestions to our deliberation process.

As a result of our collective discussions, we came up with an idea for what we are internally calling "Curated Content Views." These "views" are lists of related content topics that are organized to answer a particular question or customer need. A view is assembled by someone at Microsoft based on input from anyone who thinks that an article, blog, video, or code sample might be beneficial as part of the view.

With that in mind, here are three conceptual content views that a few of the writers on our content team have assembled:

- How to deploy an ASP.NET web application to a Windows Azure Web Site using Visual Studio

- How to secure an ASP.NET MVC app

- How Do I Configure FTP Security in IIS?

Our team is requesting feedback from members of the community on these conceptual views: specifically, the level of detail that is included in each view, the conceptual layouts that were used, and any thoughts about how this content compares with existing table of contents topics or content maps. You can reply to our content team via email, or you can post a response to this blog.

While we are interested in any feedback you may have, our team has put together the following list of specific questions to think about:

- Each curated view/content map includes a list of suggested content links. Below is a list of additional information that could be provided with each link. Which of these are most important?

  - Date that the content was posted.

  - Type of content (video, article, code sample, etc.).

  - Author name.

  - Short description.

  - Level of difficulty of the content.

  - Version of software/framework or SDK the content refers to.

  - Website the content appears on.

  - Number of likes or positive reviews.

  - Rating assigned to the content by the community.

- If you opened a page similar to one of these curated views/content maps from Google or Bing search results, would you be likely to try the links on this page or just return to search results?

- If Microsoft and community experts published a large set of content views similar to these on a website, would you visit that site first when you had technical questions, or would you do an Internet search on Google/Bing first?

- Do the questions addressed by each curated view seem too narrow or too broad in scope to be helpful? If so, which ones?

- Do any of the curated views/content maps provide too much or too little detail for each link in the list? If so, which ones?

- Do you find it helpful to see the profile of the person who created the curated view/content map?

- If we provided an easy way for you to publish your own curated views (with attribution) to a common site together with the Microsoft-created curated views, would you be interested in doing so? Why or why not?

- If we provided an easy way for you to suggest new content items to add to content views/content maps that have already been published, would you be interested in doing so? Why or why not?

- What would make these content views/content maps more helpful?

Thanks!

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

IIS 6.0 WebDAV and Compound Document Format Files Revisited with Workarounds

24 April 2013 • by Bob • IIS, WebDAV, IIS 6

A few years ago I wrote the following blog, wherein I described how the WebDAV functionality in IIS 6.0 worked with files that are Compound Document format:

IIS 6.0 WebDAV and Compound Document Format Files

As I explained in that blog post, WebDAV needs somewhere to store "properties" for files that are uploaded to the server, and WebDAV uses the compound document format to accomplish this according to the following implementation logic:

- If the file is already in the compound document file format, IIS simply adds the WebDAV properties to the existing file. This data will not be used by the application that created the file - it will only be used by WebDAV. However, the file size will increase because WebDAV properties are added to the compound document.

- For other files, WebDAV stores a compound document in an NTFS alternate data stream that is attached to the file. You will never see this additional data from any directory listing, and the file size doesn't change because it's in an alternate data stream.
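Whether a file falls into the first case can be checked from its first eight bytes, because compound document files begin with a fixed signature (D0 CF 11 E0 A1 B1 1A E1). The following Python sketch is only an illustration and is not part of IIS or WebDAV; the function name `is_compound_document` is made up for this example.

```python
# Signature found at offset 0 of every compound document (OLE2) file.
CFB_SIGNATURE = bytes.fromhex("D0CF11E0A1B11AE1")


def is_compound_document(path):
    """Return True if the file at `path` starts with the compound document signature."""
    with open(path, "rb") as handle:
        return handle.read(len(CFB_SIGNATURE)) == CFB_SIGNATURE
```

A file for which this returns True is one where the WebDAV properties would be written into the file itself rather than into an alternate data stream.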

I recently had a customer contact me in order to ask if there was a way to disable this functionality since he didn't want his files modified in order to store the WebDAV properties. Unfortunately there is no built-in option for IIS that will disable this functionality, but there are a few workarounds.

Workaround #1 - Change the File Type

First and foremost - you can change your file type to something other than the compound document format. For example, if you are uploading files that were created in Microsoft Office, you will not run into this problem if you can upload your files in the newer Office Open XML formats. By way of explanation, older Microsoft Office files are in compound document format, whereas files that are created with Microsoft Office 2007 and later are, by default, in a zipped, XML-based file format. These files will have extensions like *.DOCX for Microsoft Word documents, *.XLSX for Microsoft Excel spreadsheets, and *.PPTX for Microsoft PowerPoint presentations.

Workaround #2 - Wrap Compound Document Files in a Separate File Type

If you are using a file that must be in compound document format, like a setup package in Windows Installer (*.MSI) format, you can upload the file in a *.ZIP file, or you can wrap the setup package inside a self-extracting executable by using a technology like Microsoft's IExpress Wizard (which ships as a built-in utility with most versions of Windows).
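If you have many files to wrap, the ZIP option is easy to script. Here is a minimal Python sketch that uses the standard `zipfile` module; the helper name `wrap_in_zip` and the "original name plus .zip" naming scheme are assumptions for this example, not anything that IIS requires.

```python
import zipfile
from pathlib import Path


def wrap_in_zip(source_path):
    """Store `source_path` in a new .zip archive beside it and return the archive path."""
    source = Path(source_path)
    # For example, "setup.msi" becomes "setup.msi.zip".
    archive = source.with_suffix(source.suffix + ".zip")
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as bundle:
        # arcname keeps only the file name, so the archive has no folder structure.
        bundle.write(source, arcname=source.name)
    return archive
```

The resulting archive is not in compound document format, so WebDAV would store its properties in an alternate data stream instead of inside the file.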

Workaround #3 - Block WebDAV Properties

If you absolutely cannot change your document from compound document format, I have a completely unsupported workaround that I can suggest. Since the problem arises when properties are added to a file, you can find a way to intercept the WebDAV commands that try to set properties. The actual HTTP verb that is used is PROPPATCH, so if you can find a way to keep this command from being used, then you can prevent files from being modified. Unfortunately you cannot simply suppress PROPPATCH commands by using a security tool like Microsoft's UrlScan to block the command, because this will cause many WebDAV clients to fail.

Instead, what I did as a workaround was to write an example ISAPI filter for IIS 6.0 that intercepts incoming PROPPATCH commands and always sends a successful response to the WebDAV client (a "207 Multi-Status" response that reports "200 OK" for the resource), but in reality the filter does nothing with the properties and ends the request processing. This tricks a WebDAV client into thinking that it succeeded, and it prevents your files in compound document format from being modified. However, this also means that no WebDAV properties will ever be stored with your files; but if that's acceptable to you (and it usually should be), then you can use this workaround.

With that in mind, here's the C++ code for my example ISAPI filter, and please remember that this is a completely unsupported workaround that is intended for use only when you cannot repackage your files to use something other than the compound document format.

    #define _WIN32_WINNT 0x0400

    #include <windows.h>
    #include <httpfilt.h>
    #include <string.h>

    #define STRSAFE_LIB
    #include <strsafe.h>

    #define BUFFER_SIZE 2048

    const char xmlpart1[] =
        "<?xml version=\"1.0\"?>"
        "<a:multistatus xmlns:a=\"DAV:\">"
        "<a:response>"
        "<a:href>";

    const char xmlpart2[] =
        "</a:href>"
        "<a:propstat>"
        "<a:status>HTTP/1.1 200 OK</a:status>"
        "</a:propstat>"
        "</a:response>"
        "</a:multistatus>";

    BOOL WINAPI GetFilterVersion(PHTTP_FILTER_VERSION pVer)
    {
        HRESULT hr = S_OK;

        // Set the filter's version.
        pVer->dwFilterVersion = HTTP_FILTER_REVISION;

        // Set the filter's description.
        hr = StringCchCopyEx(
            pVer->lpszFilterDesc, 256, "PROPPATCH",
            NULL, NULL, STRSAFE_IGNORE_NULLS);
        if (FAILED(hr)) return FALSE;

        // Set the filter's flags.
        pVer->dwFlags = SF_NOTIFY_ORDER_HIGH | SF_NOTIFY_PREPROC_HEADERS;

        return TRUE;
    }

    DWORD WINAPI HttpFilterProc(
        PHTTP_FILTER_CONTEXT pfc,
        DWORD NotificationType,
        LPVOID pvNotification )
    {
        // Verify the correct notification.
        if ( NotificationType == SF_NOTIFY_PREPROC_HEADERS)
        {
            PHTTP_FILTER_PREPROC_HEADERS pHeaders;
            HRESULT hr = S_OK;
            bool fSecure = false;

            char szServerName[BUFFER_SIZE] = "";
            char szSecure[2] = "";
            char szResponseXML[BUFFER_SIZE] = "";
            char szResponseURL[BUFFER_SIZE] = "";
            char szRequestURL[BUFFER_SIZE] = "";
            char szMethod[BUFFER_SIZE] = "";
            DWORD dwBuffSize = 0;

            pHeaders = (PHTTP_FILTER_PREPROC_HEADERS) pvNotification;

            // Get the method of the request.
            dwBuffSize = BUFFER_SIZE-1;
            // Exit with an error status if a failure occurred.
            if (!pfc->GetServerVariable(
                pfc, "HTTP_METHOD", szMethod, &dwBuffSize))
                return SF_STATUS_REQ_ERROR;

            if (strcmp(szMethod, "PROPPATCH") == 0)
            {
                // Send the HTTP status to the client.
                if (!pfc->ServerSupportFunction(
                    pfc, SF_REQ_SEND_RESPONSE_HEADER,
                    "207 Multi-Status", 0, 0))
                    return SF_STATUS_REQ_ERROR;

                // Get the URL of the request.
                dwBuffSize = BUFFER_SIZE-1;
                if (!pfc->GetServerVariable(
                    pfc, "URL", szRequestURL, &dwBuffSize))
                    return SF_STATUS_REQ_ERROR;

                // Determine if request was sent over secure port.
                dwBuffSize = 2;
                if (!pfc->GetServerVariable(
                    pfc, "SERVER_PORT_SECURE", szSecure, &dwBuffSize))
                    return SF_STATUS_REQ_ERROR;
                fSecure = (szSecure[0] == '1');

                // Get the server name.
                dwBuffSize = BUFFER_SIZE-1;
                if (!pfc->GetServerVariable(
                    pfc, "SERVER_NAME", szServerName, &dwBuffSize))
                    return SF_STATUS_REQ_ERROR;

                // Set the response URL.
                hr = StringCchPrintf(
                    szResponseURL, BUFFER_SIZE-1, "http%s://%s/%s",
                    (fSecure ? "s" : ""), szServerName, &szRequestURL[1]);
                // Exit with an error status if a failure occurs.
                if (FAILED(hr)) return SF_STATUS_REQ_ERROR;

                // Set the response body.
                hr = StringCchPrintf(
                    szResponseXML, BUFFER_SIZE-1, "%s%s%s",
                    xmlpart1, szResponseURL, xmlpart2);
                // Exit with an error status if a failure occurs.
                if (FAILED(hr)) return SF_STATUS_REQ_ERROR;

                // Write the response body to the client.
                dwBuffSize = (DWORD) strlen(szResponseXML);
                if (!pfc->WriteClient(
                    pfc, szResponseXML, &dwBuffSize, 0))
                    return SF_STATUS_REQ_ERROR;

                // Flag the request as completed.
                return SF_STATUS_REQ_FINISHED;
            }
        }

        return SF_STATUS_REQ_NEXT_NOTIFICATION;
    }
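If you want to see what the filter sends back without compiling it, the body-building part is easy to mirror. This Python sketch only reproduces the string handling from the C++ code above so that the result can be inspected; the function name `build_proppatch_response` and the host name in the usage note are made up for the example.

```python
import xml.etree.ElementTree as ET

XML_PART1 = ('<?xml version="1.0"?>'
             '<a:multistatus xmlns:a="DAV:">'
             '<a:response>'
             '<a:href>')

XML_PART2 = ('</a:href>'
             '<a:propstat>'
             '<a:status>HTTP/1.1 200 OK</a:status>'
             '</a:propstat>'
             '</a:response>'
             '</a:multistatus>')


def build_proppatch_response(server_name, request_url, secure):
    """Build the 207 Multi-Status body in the same way as the filter."""
    scheme = "https" if secure else "http"
    # Like the filter, skip the first character of the URL (the leading slash).
    response_url = "%s://%s/%s" % (scheme, server_name, request_url[1:])
    return XML_PART1 + response_url + XML_PART2


def href_of(body):
    """Parse a response body and return the URL that it reports on."""
    root = ET.fromstring(body)
    return root.find("{DAV:}response/{DAV:}href").text
```

For example, `href_of(build_proppatch_response("www.example.com", "/docs/report.doc", False))` gives back `http://www.example.com/docs/report.doc`. Like the C++ filter, this sketch inserts the URL into the XML without escaping it.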

I hope this helps. ;-]

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

A=432Hz Tuning versus A=440Hz Tuning

22 April 2013 • by Bob • Guitar, Music

A coworker recently pointed me to the following blog post, and he asked if it had any basis in reality: 432Hz: Crazy Theory Or Crazy Fact. After looking at that blog, I think a better title for it would be "432Hz: Misinterpreted Theory and Misconstrued Facts." I honestly mean no disrespect to the author by my suggestion; but the blog's author clearly does not understand the theory behind what he is discussing. And because he misunderstands some basic concepts, his discussion on this subject offers little by way of practical information. As such, I thought that I would set the record straight on a few things and offer some useful information on the subject.

First of all, the author's suggestion that using A=432Hz for a reference when tuning will put your guitar in Pythagorean Tuning is completely false; all you are doing is changing the base frequency that you are using, but your guitar will still be in Standard Tuning.

Discussing the base frequency is about as effective as discussing the merits of an E-Flat Tuning versus Standard-E Tuning; either one is fine, and it just comes down to user preference as to which one is better. The same thing holds true for choosing A=432Hz over A=440Hz - it's a preference choice. (Unless you have Perfect Pitch, in which case A=432Hz is probably going to annoy you more than words can say.)

However, there is one major difference: if you choose to record music by using an other-than-normal base frequency, you'll frustrate the heck out of someone who just tuned their guitar with a standard tuner and attempts to sit down and learn your music. ("Hmm... this just doesn't sound right.") And you could retune just to annoy them for fun, of course. ;-]

That being said, any discussion of Pythagorean Tuning and the guitar is utterly useless, because a guitar is not fretted for Pythagorean Tuning. Here is where the real confusion lies, because the author of that blog is confusing changing the base frequency with somehow putting the guitar into a different temperament, which is not possible without re-fretting your instrument. Here's what I mean by that:

The physical spacing of the frets on a guitar neck is based on Equal Temperament, in which each successive semitone is higher than the previous one by a constant ratio: the 12th root of 2. In Microsoft Excel that formula would be 10^(LOG(2)/12), which comes to 1.0594630944. We all know that an octave is double the frequency of the base pitch, so with A=440Hz you would get A=880Hz for the next higher octave. By using the above constant, you can create the following scale from an A to an A in the next higher octave by multiplying each frequency in the scale by the constant in order to derive the resultant frequency for each successive note:

| Note | Frequency |
|---|---|
| A | 440.00Hz |
| Bb | 466.16Hz |
| B | 493.88Hz |
| C | 523.25Hz |
| C# | 554.37Hz |
| D | 587.33Hz |
| D# | 622.25Hz |
| E | 659.26Hz |
| F | 698.46Hz |
| F# | 739.99Hz |
| G | 783.99Hz |
| Ab | 830.61Hz |
| A | 880.00Hz |
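The scale above can be reproduced with a few lines of code. This is a minimal Python sketch of the multiply-by-the-constant approach that was just described; the names `SEMITONE` and `equal_tempered_scale` are made up for the example.

```python
SEMITONE = 2 ** (1 / 12)  # the 12th root of 2, about 1.0594630944

NOTE_NAMES = ["A", "Bb", "B", "C", "C#", "D", "D#", "E", "F", "F#", "G", "Ab", "A"]


def equal_tempered_scale(base_frequency):
    """Return (note, frequency) pairs for one octave starting at base_frequency."""
    scale = []
    frequency = base_frequency
    for name in NOTE_NAMES:
        scale.append((name, frequency))
        # Each successive note is one semitone (one constant ratio) higher.
        frequency *= SEMITONE
    return scale


for name, frequency in equal_tempered_scale(440.0):
    print("%-2s = %.2fHz" % (name, frequency))
```

Passing a different base frequency to `equal_tempered_scale` shifts every note by the same ratio, but the relationship between the notes stays the same.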

In contrast to the claims that were made by the blog's author, you do not magically get whole-number frequencies (i.e. with nothing after the decimal point) if you change the base frequency to A=432Hz; the math just doesn't support that. Here is the list of resulting frequencies for an octave if you start with a base frequency of A=432Hz, and I have included a comparison with a base frequency of A=440Hz:

| Note | A=432Hz | A=440Hz |
|---|---|---|
| A | 432.00Hz | 440.00Hz |
| Bb | 457.69Hz | 466.16Hz |
| B | 484.90Hz | 493.88Hz |
| C | 513.74Hz | 523.25Hz |
| C# | 544.29Hz | 554.37Hz |
| D | 576.65Hz | 587.33Hz |
| D# | 610.94Hz | 622.25Hz |
| E | 647.27Hz | 659.26Hz |
| F | 685.76Hz | 698.46Hz |
| F# | 726.53Hz | 739.99Hz |
| G | 769.74Hz | 783.99Hz |
| Ab | 815.51Hz | 830.61Hz |
| A | 864.00Hz | 880.00Hz |

When you look at the two sets of frequencies side-by-side, you see that tuning with either base frequency yields only two whole-number frequencies - one for each of the A notes. However, when you use the standard A=440Hz tuning, you have two frequencies (the F# and G) that almost fall on whole numbers (at 739.99Hz and 783.99Hz respectively). Not that this really matters - your ear is not going to care whether a frequency falls on a whole number. (Although it might look nice on paper if you have Obsessive Compulsive Disorder and you rounded every frequency to the nearest whole number.)
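Both observations can be checked numerically. The Python sketch below is an illustration only, and its function names and the 0.005Hz tolerance are arbitrary choices for this example. It measures the distance between the two base frequencies in cents (hundredths of a semitone) and lists which steps of the octave land on a whole number.

```python
import math


def cents_between(lower_hz, higher_hz):
    """Return the interval from lower_hz up to higher_hz in cents."""
    return 1200 * math.log2(higher_hz / lower_hz)


def whole_number_steps(base_frequency, tolerance=0.005):
    """Return the semitone steps (0 to 12) whose frequency is within tolerance of an integer."""
    steps = []
    for step in range(13):
        frequency = base_frequency * 2 ** (step / 12)
        if abs(frequency - round(frequency)) < tolerance:
            steps.append(step)
    return steps


print("%.2f cents" % cents_between(432.0, 440.0))
print(whole_number_steps(432.0), whole_number_steps(440.0))
```

With these definitions, both base frequencies report only steps 0 and 12 (the two A notes), and A=432Hz sits a little under a third of a semitone below A=440Hz. Because every note is derived from the base frequency by the same ratios, that offset is identical for every row of the table.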

Since the frets on the guitar are based on this temperament, that's all you get - period. You can fudge your base frequency up or down all you want, but in the end you're still going to be using Equal Temperament, unless you completely re-fret your guitar as I already mentioned. (Note: See the FreeNotes website for guitar necks that are fretted for alternate temperaments.)
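To see why the base frequency has no effect on the frets, it helps to look at how fret positions are calculated for an equal-tempered neck: each fret shortens the vibrating string by the same 12th-root-of-2 ratio. Here is a minimal Python sketch; the function name is made up, and the 25.5-inch scale length is just an example value.

```python
def fret_distance_from_nut(scale_length, fret):
    """Return the distance from the nut to the given fret on an equal-tempered neck."""
    # The vibrating length that remains at fret n is scale_length / 2^(n/12).
    return scale_length * (1 - 2 ** (-fret / 12))


for fret in (1, 5, 7, 12):
    print("fret %2d: %.3f in" % (fret, fret_distance_from_nut(25.5, fret)))
```

Notice that the function takes no frequency at all. The 12th fret always lands at exactly half the scale length, whether the open string is tuned against A=440Hz or A=432Hz.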

If you had a background that included synthesizers (and as a guitar player I must apologize for my side hobby on keyboards), you might remember that back in the 1980s there was a passing phase with microtonality on keyboards. If you had a keyboard that supported this technology, you were able to play your keyboard by using intonation that was different from Equal Temperament, which was sometimes pretty cool.

Why would someone want to do this? Because many of the old composers never used Equal Temperament; that's a fairly recent invention. So if you want to hear what a piece of piano music sounded like for the original composer, you might want to set up your keyboard to use the same temperament that the composer actually used.

But that being said, before the invention of Equal Temperament, there were several competing temperaments, and each was usually based on tuning some interval like the fourth or fifth by ear, and then finding intervals in-between those other intervals that sounded acceptable. What this resulted in, however, was a plethora of tunings/temperaments that sounded great in some keys and terrible in others. More than that, if you continue to work your way up a scale by using intervals that are tuned by ear, you will eventually introduce errors. Using the actual Pythagorean Tuning suffers from this problem, so if you put a microtonal keyboard into Pythagorean Tuning and attempted to play a piece of music that extended past a couple of octaves, it sounded terrible. (See Pythagorean Tuning for an explanation.)

But on that note, almost every guitarist suffers from this same problem, but most just don't know it. Have you ever tuned your guitar by using the 5th-fret and 7th-fret harmonics? Of course you have, and so have I. But here's a side point that most guitarists don't know - when you tune your guitar by using those harmonics, you slowly introduce errors across the guitar, and as a result it will seldom seem completely in tune with itself.
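The size of that error is easy to estimate. The 5th-fret harmonic sounds at four times the open-string frequency and the 7th-fret harmonic at three times, so matching the two sets the higher string to exactly 4/3 of the lower one, whereas Equal Temperament puts it five semitones higher. The following Python sketch assumes a low E of 82.41Hz and covers only the E, A, D, and G strings; all of the names in it are made up for the example.

```python
import math

PURE_FOURTH = 4 / 3              # ratio produced by matching the two harmonics
TEMPERED_FOURTH = 2 ** (5 / 12)  # five equal-tempered semitones


def harmonic_tuning_error_cents(low_e_hz, strings=4):
    """Return how far each string lands from Equal Temperament, in cents."""
    errors = []
    by_harmonics = low_e_hz
    by_tuner = low_e_hz
    for _ in range(strings):
        errors.append(1200 * math.log2(by_harmonics / by_tuner))
        # Move to the next higher string with each tuning method.
        by_harmonics *= PURE_FOURTH
        by_tuner *= TEMPERED_FOURTH
    return errors


for name, error in zip("EADG", harmonic_tuning_error_cents(82.41)):
    print("%s: %+.2f cents" % (name, error))
```

Each string tuned this way ends up about 2 cents flatter than the one before it, so the G string is already almost 6 cents flat relative to where a tuner would put it.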

Here's an excerpt from a write-up that I did for the Christian Guitar website a while ago that describes what I mean:

There have been many different temperaments used in the Western Hemisphere, and many of these centered around specific intervals. For example, start with a C note, then find the perfect octave above; you now have the starting and ending points for your scale. Next, find the harmonically perfect 5th of G by tuning and listening to pitches, then use these intervals to find E, which is the major 3rd. Once done, you now have three notes of your scale and the octave. If you jump up to G and use the same process to find the 3rd and 5th, you get the B and D notes. If you keep repeating the process, you eventually derive all of the diatonic notes for your major scale. On a piano that would be just the white keys.

Leaving sharps and flats out of this example, (the piano's black keys), the problem is that if you keep using the perfect 5th for a reference, you gradually find that the notes in your scale are not lining up as you travel around the circle of 5ths. This occurs because using perfect 5ths will eventually introduce slight errors on other intervals, and the result will be that your scale works great in one or two keys, but other keys sound noticeably awful.

Here's why this happens: after having gone around the entire circle using perfect 5ths as a tuning guide, by the time you get to the octave above your starting note, the physical frequency for the octave is not the same as the last pitch that you derived from tuning based on the perfect 5ths. This is especially problematic when you use one particular note/key to tune an instrument, and then try to play in another key. For example, if you tune an instrument using perfect 5ths and start on a C note, the key of C# will sound distinctively out-of-tune.

The only trouble that some people might have with equal-temperament is that the intervals within the octave are not based on perfect intervals, but rather intervals based on the constant. This causes a lot of problems with people who tune by ear using perfect 5ths, which many guitarists do without realizing when they tune their guitars using harmonics over the 7th fret.

For example, if you were to tune an E note using an A note as a reference point, your ear would want to hear the perfect 5th for E which is 660.00Hz, not the equal-tempered E that is 659.26Hz. Although the difference is very small, it is compounded over time as you tune the other notes within the scale. If you continued to tune using 5ths, your next note higher would be the B that is a 5th over E. Your ear would want to hear the perfect 5th again, so you would wind up with 990.00Hz for B instead of the equal-tempered 987.77Hz. Another perfect 5th would be 1485Hz instead of the equal-tempered 1479.98Hz, then 2227.50Hz instead of 2217.46Hz, etc.
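The arithmetic in that excerpt can be sketched in Python as follows. The code stacks perfect 5ths (a ratio of 3/2) on top of A=440Hz, compares each result with its equal-tempered counterpart, and then shows how far twelve perfect 5ths overshoot seven octaves. The names are made up for the example.

```python
from fractions import Fraction

PERFECT_FIFTH = Fraction(3, 2)


def stacked_fifths(base_hz, count):
    """Return (pure, equal_tempered) frequency pairs for `count` 5ths above base_hz."""
    pairs = []
    for n in range(1, count + 1):
        pure = base_hz * float(PERFECT_FIFTH ** n)
        tempered = base_hz * 2 ** (7 * n / 12)  # a tempered 5th is 7 semitones
        pairs.append((pure, tempered))
    return pairs


# Twelve perfect 5ths should equal seven octaves, but they overshoot slightly.
OVERSHOOT = PERFECT_FIFTH ** 12 / Fraction(2) ** 7

for pure, tempered in stacked_fifths(440.0, 4):
    print("%.2fHz instead of %.2fHz" % (pure, tempered))
print("overshoot after 12 fifths:", OVERSHOOT, "=", float(OVERSHOOT))
```

The overshoot works out to the fraction 531441/524288, or a little over 1.0136, which is why the circle of 5ths never quite closes when every 5th is tuned perfectly.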

I personally find the math part of music fascinating, and I've obviously spent a bunch of time (perhaps too much time ;-]) studying notes, scales and tunings from a mathematical perspective. Because of that, I view the whole guitar neck as a numerical system and all chords/scales as algorithms. I know that's really geeky, but it's still pretty cool. In the end, I think that math might be my 2nd-favorite part of music. (My favorite part is turning the amps up to 11 and feeling the actual notes as they tangibly pass through my body - it's like a physical feedback loop. Very cool...)

The net result of this discussion is - use a tuner when you are tuning your guitar, not your ear. And it doesn't matter what your base frequency is when you are tuning your guitar - you are still using Equal Temperament because that's the way that your guitar is made. ;-]

Adding Custom FTP Providers with the IIS Configuration Editor - Part 1

31 March 2013 • by Bob • Extensibility, FTP, IIS

I've written a lot of walkthroughs and blog posts about creating custom FTP providers over the past several years, and I usually include instructions for adding these custom providers to IIS. When you create a custom FTP authentication provider, IIS has a user interface for adding that provider to FTP. But if you are adding a custom home directory or logging provider, there is no dedicated user interface for adding those types of FTP providers. In addition, if you create a custom FTP provider that requires settings that are stored in your IIS configuration, there is no user interface to add or manage those settings.

With this in mind, I include instructions in my blogs and walkthroughs that describe how to add those types of providers by using AppCmd.exe from a command line. For example, if you take a look at my How to Use Managed Code (C#) to Create an FTP Authentication and Authorization Provider using an XML Database walkthrough, I include the following instructions:

Adding the Provider

- Determine the assembly information for the extensibility provider:

  - In Windows Explorer, open your "C:\Windows\assembly" path, where C: is your operating system drive.

  - Locate the FtpXmlAuthorization assembly.

  - Right-click the assembly, and then click Properties.

  - Copy the Culture value; for example: Neutral.

  - Copy the Version number; for example: 1.0.0.0.

  - Copy the Public Key Token value; for example: 426f62526f636b73.

  - Click Cancel.

- Using the information from the previous steps, add the extensibility provider to the global list of FTP providers and configure the options for the provider:

  - At the moment there is no user interface that enables you to add properties for custom authentication or authorization modules, so you will have to use the following command line:

    cd %SystemRoot%\System32\Inetsrv

    appcmd.exe set config -section:system.ftpServer/providerDefinitions /+"[name='FtpXmlAuthorization',type='FtpXmlAuthorization,FtpXmlAuthorization,version=1.0.0.0,Culture=neutral,PublicKeyToken=426f62526f636b73']" /commit:apphost

    appcmd.exe set config -section:system.ftpServer/providerDefinitions /+"activation.[name='FtpXmlAuthorization']" /commit:apphost

    appcmd.exe set config -section:system.ftpServer/providerDefinitions /+"activation.[name='FtpXmlAuthorization'].[key='xmlFileName',value='C:\Inetpub\XmlSample\Users.xml']" /commit:apphost

  - Note: The file path that you specify in the xmlFileName attribute must match the path where you saved the "Users.xml" file on your computer earlier in this walkthrough.


This example adds a custom FTP provider, and then it adds a custom setting for that provider that is stored in your IIS configuration settings.
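The three AppCmd.exe commands follow a fixed pattern, so they can be generated from the values that you copied from the assembly properties. The Python sketch below only builds the command strings and does not run anything; the helper names `provider_type_string` and `build_appcmd_commands` are made up for this example.

```python
SECTION = "appcmd.exe set config -section:system.ftpServer/providerDefinitions"


def provider_type_string(type_name, assembly, version, culture, public_key_token):
    """Combine the assembly details into the value used for the type attribute."""
    return "{},{},version={},Culture={},PublicKeyToken={}".format(
        type_name, assembly, version, culture, public_key_token)


def build_appcmd_commands(name, type_string, settings):
    """Return the commands that register a provider and add its settings."""
    changes = [
        "[name='{}',type='{}']".format(name, type_string),
        "activation.[name='{}']".format(name),
    ]
    for key, value in settings.items():
        changes.append(
            "activation.[name='{}'].[key='{}',value='{}']".format(name, key, value))
    # Every change is committed to applicationHost.config (apphost).
    return ['{} /+"{}" /commit:apphost'.format(SECTION, change) for change in changes]


type_string = provider_type_string(
    "FtpXmlAuthorization", "FtpXmlAuthorization",
    "1.0.0.0", "neutral", "426f62526f636b73")
for command in build_appcmd_commands(
        "FtpXmlAuthorization", type_string,
        {"xmlFileName": r"C:\Inetpub\XmlSample\Users.xml"}):
    print(command)
```

The printed output matches the three commands from the walkthrough, with one additional command for each extra key/value pair that you pass in.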

That being said, there is actually a way to add custom FTP providers with settings like the ones that I have just described through the IIS interface by using the IIS Configuration Editor. This feature was first available through the IIS Administration Pack for IIS 7.0, and is built-in for IIS 7.5 and IIS 8.0.

Before I continue, it would probably be prudent to take a look at the settings that we are trying to add, because these settings might help you to understand the rest of the steps in this blog. Here is an example from my applicationhost.config file for three FTP authentication providers; the first two providers are installed with the FTP service, and the third provider is a custom provider that I created with a single provider-specific configuration setting:

    <system.ftpServer>
      <providerDefinitions>
        <add name="IisManagerAuth" type="Microsoft.Web.FtpServer.Security.IisManagerAuthenticationProvider, Microsoft.Web.FtpServer, version=7.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
        <add name="AspNetAuth" type="Microsoft.Web.FtpServer.Security.AspNetFtpMembershipProvider, Microsoft.Web.FtpServer, version=7.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
        <add name="FtpXmlAuthorization" type="FtpXmlAuthorization, FtpXmlAuthorization, version=1.0.0.0, Culture=neutral, PublicKeyToken=426f62526f636b73" />
        <activation>
          <providerData name="FtpXmlAuthorization">
            <add key="xmlFileName" value="C:\inetpub\FtpUsers\Users.xml" />
          </providerData>
        </activation>
      </providerDefinitions>
    </system.ftpServer>
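If the relationship between the provider list and the activation data is not obvious from the XML, reading it programmatically may help. The following Python sketch parses a copy of the excerpt above (held in a string, not read from the live configuration file) and pairs each provider with its settings; the function name `list_providers` is made up for the example.

```python
import xml.etree.ElementTree as ET

SAMPLE_CONFIG = r"""
<system.ftpServer>
  <providerDefinitions>
    <add name="IisManagerAuth" type="Microsoft.Web.FtpServer.Security.IisManagerAuthenticationProvider, Microsoft.Web.FtpServer, version=7.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
    <add name="AspNetAuth" type="Microsoft.Web.FtpServer.Security.AspNetFtpMembershipProvider, Microsoft.Web.FtpServer, version=7.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
    <add name="FtpXmlAuthorization" type="FtpXmlAuthorization, FtpXmlAuthorization, version=1.0.0.0, Culture=neutral, PublicKeyToken=426f62526f636b73" />
    <activation>
      <providerData name="FtpXmlAuthorization">
        <add key="xmlFileName" value="C:\inetpub\FtpUsers\Users.xml" />
      </providerData>
    </activation>
  </providerDefinitions>
</system.ftpServer>
"""


def list_providers(config_xml):
    """Return {provider name: {"type": ..., "settings": {...}}} for the section."""
    definitions = ET.fromstring(config_xml).find("providerDefinitions")
    providers = {}
    # The <add> elements directly under providerDefinitions register the providers.
    for entry in definitions.findall("add"):
        providers[entry.get("name")] = {"type": entry.get("type"), "settings": {}}
    # Each providerData element is linked to its provider by the name attribute.
    for data in definitions.findall("activation/providerData"):
        provider = providers.setdefault(data.get("name"), {"type": None, "settings": {}})
        for item in data.findall("add"):
            provider["settings"][item.get("key")] = item.get("value")
    return providers


for name, details in list_providers(SAMPLE_CONFIG).items():
    print(name, details["settings"])
```

The only thing that ties a providerData entry to its provider is the name attribute, which is why the names have to match exactly.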

With that in mind, in part 1 of this blog series, I will show you how to use the IIS Configuration Editor to add a custom FTP provider with provider-specific configuration settings.

Step 1 - Open the IIS Manager and click the Configuration Editor feature at the server level:

Step 2 - Click the Section drop-down menu, expand the system.ftpServer collection, and then highlight the providerDefinitions node:

Step 3 - A default installation of IIS with the FTP service should show a Count of 2 providers in the Collection row, and no settings in the activation row:

Step 4 - If you click on the Collection row, an ellipsis [...] will appear, and when you click that, IIS will display the Collection Editor dialog for FTP providers. By default you should see just the two built-in providers for the IisManagerAuth and AspNetAuth providers:

Step 5 - When you click Add in the Actions pane, you can enter the registration information for your provider. At a minimum you must provide a name for your provider, but you will need to enter either the clsid for a COM-based provider or the type for a managed-code provider:

Step 6 - When you close the Collection Editor dialog, the Count of providers in the Collection should now reflect the provider that we just added; click Apply in the Actions pane to save the changes:

Step 7 - If you click on the activation row, an ellipsis [...] will appear, and when you click that, IIS will display the Collection Editor dialog for provider data; this is where you will enter provider-specific settings. When you click Add in the Actions pane, you must specify the name for your provider's settings, and this name must match the exact name that you provided in Step 5 earlier:

Step 8 - If you click on the Collection row, an ellipsis [...] will appear, and when you click that, IIS will display the Collection Editor dialog for the activation data for an FTP provider. At a minimum you must provide a key for your provider, which will depend on the settings that your provider expects to retrieve from your configuration settings. (For example, in the XML file that I provided earlier, my FtpXmlAuthorization provider expects to retrieve the path to an XML file that contains a list of users, roles, and authorization rules.) You also need to enter either the value or the encryptedValue for your provider; although you can specify either setting, you should generally specify the value when the settings are not sensitive in nature, and specify the encryptedValue for settings like usernames and passwords:

Step 9 - When you close the Collection Editor dialog for the activation data, the Count of key/value pairs in the Collection should now reflect the value that we just added:

Step 10 - When you close the Collection Editor dialog for the provider data, the Count of provider data settings in the activation row should now reflect the custom settings that we just added; click Apply in the Actions pane to save the changes:

That's all that there is to adding a custom FTP provider with provider-specific settings; I admit that it might seem like a lot of steps until you get the hang of it.
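To summarize what those steps change, here is a minimal Python sketch that applies the same three additions (the provider entry, the provider data entry, and the key/value pairs) to an in-memory XML element. It is only meant to show the resulting structure and it does not touch a live applicationHost.config file; the function name `add_provider` is made up for this example.

```python
import xml.etree.ElementTree as ET


def add_provider(definitions, name, type_string, settings):
    """Add a provider and its activation data to a providerDefinitions element."""
    provider = ET.Element("add", {"name": name, "type": type_string})
    activation = definitions.find("activation")
    if activation is None:
        definitions.append(provider)
        activation = ET.SubElement(definitions, "activation")
    else:
        # Keep the provider entries ahead of the existing activation element.
        definitions.insert(list(definitions).index(activation), provider)
    # The providerData name must match the provider name exactly (Step 7).
    provider_data = ET.SubElement(activation, "providerData", {"name": name})
    for key, value in settings.items():
        ET.SubElement(provider_data, "add", {"key": key, "value": value})
    return definitions


definitions = ET.fromstring(
    '<providerDefinitions>'
    '<add name="IisManagerAuth" type="..." />'
    '<add name="AspNetAuth" type="..." />'
    '</providerDefinitions>')
add_provider(
    definitions, "FtpXmlAuthorization",
    "FtpXmlAuthorization, FtpXmlAuthorization, version=1.0.0.0, "
    "Culture=neutral, PublicKeyToken=426f62526f636b73",
    {"xmlFileName": r"C:\inetpub\FtpUsers\Users.xml"})
print(ET.tostring(definitions, encoding="unicode"))
```

The `type="..."` values for the two built-in providers are placeholders in this sketch; the real values are shown in the configuration excerpt earlier in this blog.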

In the next blog for this series, I will show you how to add custom providers to FTP sites by using the IIS Configuration Editor.

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Automating the Creation of FTP User Isolation Folders

28 March 2013 • by Bob • IIS, Scripting, FTP

A customer asked me a question a little while ago that provided me the opportunity to recycle some code that I had written many years ago. In so doing, I also made a bunch of updates to the code to make it considerably more useful, and I thought that it would make a great blog.

Here's the scenario: a customer had hundreds of user accounts created, and he wanted to use the FTP service's User Isolation features to restrict each user to a specific folder on his FTP site. Since it would take a long time to manually create a folder for each user account, the customer wanted to know if there was a way to automate the process. As it turns out, I had posted a very simple script in the IIS.net forums several years ago that did something like what he wanted; and that script was based off an earlier script that I had written for someone else back in the IIS 6.0 days.

One quick reminder - FTP User Isolation uses a specific set of folders for user accounts, which are listed in the table below.

| User Account Types | Home Directory Syntax |
|---|---|
| Anonymous users | %FtpRoot%\LocalUser\Public |
| Local Windows user accounts (Requires Basic authentication.) | %FtpRoot%\LocalUser\%UserName% |
| Windows domain accounts (Requires Basic authentication.) | %FtpRoot%\%UserDomain%\%UserName% |

Note: %FtpRoot% is the root directory for your FTP site: for example, C:\Inetpub\Ftproot.
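The table boils down to a small rule that is easy to express in code. This Python sketch is an illustration of the folder layout only; the function name `isolation_home_directory` and the sample user and domain names are made up for the example.

```python
from pathlib import PureWindowsPath


def isolation_home_directory(ftp_root, user_name=None, domain=None):
    """Return the User Isolation home directory for an account."""
    root = PureWindowsPath(ftp_root)
    if user_name is None:
        # Anonymous users share a single Public folder.
        return root / "LocalUser" / "Public"
    if domain is None:
        # Local Windows accounts live under the LocalUser container.
        return root / "LocalUser" / user_name
    # Domain accounts live under a container that is named after the domain.
    return root / domain / user_name


print(isolation_home_directory(r"C:\Inetpub\Ftproot"))
print(isolation_home_directory(r"C:\Inetpub\Ftproot", "alice"))
print(isolation_home_directory(r"C:\Inetpub\Ftproot", "alice", "CONTOSO"))
```

`PureWindowsPath` is used so that the sketch produces Windows-style paths even when it is run on another operating system.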

That being said, I'm a big believer in recycling code, so I found the last version of that script that I gave to someone and I made a bunch of changes to it so it would be more useful for the customer. With that in mind, here's the resulting script, and I'll explain a little more about what it does after the code sample.

    Option Explicit

    ' Define the root path for the user isolation folders.
    ' This should be the root directory for your FTP site.
    Dim strRootPath : strRootPath = "C:\Inetpub\Ftproot\"

    ' Define the name of the domain or the computer to use.
    ' Leave this blank for the local computer.
    Dim strComputerOrDomain : strComputerOrDomain = ""

    ' Define the remaining script variables.
    Dim objFSO, objCollection, objUser, objNetwork, strContainerName

    ' Create a network object; used to query the computer name.
    Set objNetwork = WScript.CreateObject("WScript.Network")
    ' Create a file system object; used to create folders.
    Set objFSO = CreateObject("Scripting.FileSystemObject")

    ' Test if the computer name is null.
    If Len(strComputerOrDomain)=0 Or strComputerOrDomain="." Then
      ' If so, define the local computer name as the account repository.
      strComputerOrDomain = objNetwork.ComputerName
    End If

    ' Verify that the root path exists.
    If objFSO.FolderExists(strRootPath) Then

      ' Test if the script is using local users.
      If StrComp(strComputerOrDomain,objNetwork.ComputerName,vbTextCompare)=0 Then
        ' If so, define the local users container path.
        strContainerName = "LocalUser"
        ' And define the users collection as local.
        Set objCollection = GetObject("WinNT://.")
      Else
        ' Otherwise, use the source name as the path.
        strContainerName = strComputerOrDomain
        ' And define the users collection as remote.
        Set objCollection = GetObject("WinNT://" & strComputerOrDomain)
      End If

      ' Append trailing backslash if necessary.
      If Right(strRootPath,1)<>"\" Then strRootPath = strRootPath & "\"

      ' Define the adjusted root path for the container folder.
      ' (Note that this path always ends with a backslash.)
      strRootPath = strRootPath & strContainerName & "\"

      ' Test if the container folder already exists.
      If objFSO.FolderExists(strRootPath)=False Then
        ' Create the container folder if necessary.
        objFSO.CreateFolder(strRootPath)
      End If

      ' Specify the collection filter for user objects only.
      objCollection.Filter = Array("user")

      ' Loop through the users collection.
      For Each objUser In objCollection
        ' Test if the user's account is enabled.
        If objUser.AccountDisabled = False Then
          ' Test if the user's folder already exists.
          If objFSO.FolderExists(strRootPath & objUser.Name)=False Then
            ' Create the user's folder if necessary.
            objFSO.CreateFolder(strRootPath & objUser.Name)
          End If
        End If
      Next

    End If

I documented this script in great detail, so it should be self-explanatory for the most part. But just to be on the safe side, here's an explanation of what this script is doing when you run it on your FTP server:

- Defines two user-updatable variables:

    - strRootPath - which specifies the physical path to the root of your FTP site.

    - strComputerOrDomain - which specifies the computer name or the domain name where your user accounts are located. (Note: You can leave this blank if you are using local user accounts on your FTP server.)

- Creates a few helper objects and determines the local computer name if necessary.

- Checks to see if the physical path to the root of your FTP site actually exists before continuing.

- Creates a connection to the user account store (local or domain).

- Determines the container folder name that will be the parent directory of user account folders, and creates it if necessary. (See my earlier note about the folder names.)

- Defines a filter for user objects in the specified account repository. (This removes computer accounts and such from the operation.)

- Loops through the collection of user accounts, checks each account to see if it is enabled, and creates a folder for each user account if it does not already exist.
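The steps above can also be sketched in a few lines of Python. This is a rough illustration of the folder logic only, under the assumption that the account list is supplied as plain (name, disabled) pairs; it does not query Windows accounts the way the VBScript does, and the function name and sample accounts are hypothetical.

```python
import os
import tempfile


def create_isolation_folders(root_path, container_name, accounts):
    """Create <root>/<container>/<user> for every enabled account.

    accounts is an iterable of (name, disabled) pairs; this stands in for
    the WinNT:// user collection that the VBScript enumerates.
    Returns the list of folders that were newly created.
    """
    if not os.path.isdir(root_path):
        # Like the script, do nothing if the FTP root does not exist.
        return []
    container = os.path.join(root_path, container_name)
    os.makedirs(container, exist_ok=True)
    created = []
    for name, disabled in accounts:
        if disabled:
            continue  # skip disabled accounts
        folder = os.path.join(container, name)
        if not os.path.isdir(folder):
            os.mkdir(folder)
            created.append(folder)
    return created


# Hypothetical accounts, used only for illustration.
sample_accounts = [("alice", False), ("bob", True), ("carol", False)]

with tempfile.TemporaryDirectory() as ftp_root:
    first = create_isolation_folders(ftp_root, "LocalUser", sample_accounts)
    second = create_isolation_folders(ftp_root, "LocalUser", sample_accounts)
    print(sorted(os.path.basename(p) for p in first))  # folders made on the first run
    print(second)  # the second run finds nothing left to create
```

Running the function twice shows why the existence checks matter: the second pass leaves the existing folders alone instead of failing.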

That's all for now. ;-]

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Part 7 - Creating a Generic Input Format Plug-In

28 February 2013 • by Bob • LogParser, Scripting, XML

In Part 6 of this series, I showed how to create a very basic COM-based input format provider for Log Parser. I wrote that blog post as a follow-up to an earlier blog post where I had written a more complex COM-based input format provider for Log Parser that worked with FTP RSCA events. My original blog post had resulted in several requests for me to write some easier examples about how to get started writing COM-based input format providers for Log Parser, and those appeals led me to write my last blog post:

Advanced Log Parser Part 6 - Creating a Simple Custom Input Format Plug-In

The example in that blog post simply returns static data, which was the easiest example that I could demonstrate.

For this follow-up blog post, I will illustrate how to create a simple COM-based input format plug-in for Log Parser that you can use as a generic provider for consuming data in text-based log files. Please bear in mind that this is just an example to help developers get started writing their own COM-based input format providers; you might be able to accomplish some of what I will demonstrate in this blog post by using the built-in Log Parser functionality. That being said, this still seems like the best example to help developers get started because consuming data in text-based log files was the most-often-requested example that I received.

In Review: Creating COM-based plug-ins for Log Parser

In my earlier blog posts, I mentioned that a COM plug-in has to support several public methods. You can look at those blog posts when you get the chance, but it is worth repeating the following information here since it is essential to understanding how the code sample in this blog post is supposed to work.

| Method Name | Description |
|---|---|
| OpenInput | Opens your data source and sets up any initial environment settings. |
| GetFieldCount | Returns the number of fields that your plug-in will provide. |
| GetFieldName | Returns the name of a specified field. |
| GetFieldType | Returns the datatype of a specified field. |
| GetValue | Returns the value of a specified field. |
| ReadRecord | Reads the next record from your data source. |
| CloseInput | Closes your data source and cleans up any environment settings. |

Once you have created and registered a COM-based input format plug-in, you call it from Log Parser by using something like the following syntax:

logparser.exe "SELECT * FROM FOO" -i:COM -iProgID:BAR

In the preceding example, FOO is a data source that makes sense to your plug-in, and BAR is the COM class name for your plug-in.

Creating a Generic COM plug-in for Log Parser

As I have done in my previous two blog posts about creating COM-based input format plug-ins, I'm going to demonstrate how to create a COM component by using a scriptlet since no compilation is required. This generic plug-in will parse any text-based log files where records are delimited by CRLF sequences and fields/columns are delimited by a separator that is defined as a constant in the code sample.

To create the sample COM plug-in, copy the following code into a text file, and save that file as "Generic.LogParser.Scriptlet.sct" to your computer. (Note: The *.SCT file extension tells Windows that this is a scriptlet file.)

    <SCRIPTLET>
      <registration
        Description="Generic Log Parser Scriptlet"
        Progid="Generic.LogParser.Scriptlet"
        Classid="{4e616d65-6f6e-6d65-6973-526f62657274}"
        Version="1.00"
        Remotable="False" />
      <comment>
      EXAMPLE: logparser "SELECT * FROM 'C:\foo\bar.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet
      </comment>
      <implements id="Automation" type="Automation">
        <method name="OpenInput">
          <parameter name="strFileName"/>
        </method>
        <method name="GetFieldCount" />
        <method name="GetFieldName">
          <parameter name="intFieldIndex"/>
        </method>
        <method name="GetFieldType">
          <parameter name="intFieldIndex"/>
        </method>
        <method name="ReadRecord" />
        <method name="GetValue">
          <parameter name="intFieldIndex"/>
        </method>
        <method name="CloseInput">
          <parameter name="blnAbort"/>
        </method>
      </implements>
      <SCRIPT LANGUAGE="VBScript">

    Option Explicit

    ' Define the column separator in the log file.
    Const strSeparator = "|"

    ' Define whether the first row contains column names.
    Const blnHeaderRow = True

    ' Define the field type constants.
    Const TYPE_INTEGER   = 1
    Const TYPE_REAL      = 2
    Const TYPE_STRING    = 3
    Const TYPE_TIMESTAMP = 4
    Const TYPE_NULL      = 5

    ' Declare variables.
    Dim objFSO, objFile, blnFileOpen
    Dim arrFieldNames, arrFieldTypes
    Dim arrCurrentRecord

    ' Indicate that no file has been opened.
    blnFileOpen = False

    ' --------------------------------------------------------------------------------
    ' Open the input session.
    ' --------------------------------------------------------------------------------

    Public Function OpenInput(strFileName)
      Dim tmpCount
      ' Test for a file name.
      If Len(strFileName)=0 Then
        ' Return a status that the parameter is incorrect.
        OpenInput = 87
        blnFileOpen = False
      Else
        ' Test for single-quotes.
        If Left(strFileName,1)="'" And Right(strFileName,1)="'" Then
          ' Strip the single-quotes from the file name.
          strFileName = Mid(strFileName,2,Len(strFileName)-2)
        End If
        ' Open the file system object.
        Set objFSO = CreateObject("Scripting.FileSystemObject")
        ' Verify that the specified file exists.
        If objFSO.FileExists(strFileName) Then
          ' Open the specified file.
          Set objFile = objFSO.OpenTextFile(strFileName,1,False)
          ' Set a flag to indicate that the specified file is open.
          blnFileOpen = True
          ' Retrieve an initial record.
          Call ReadRecord()
          ' Redimension the array of field names.
          ReDim arrFieldNames(UBound(arrCurrentRecord))
          ' Loop through the record fields.
          For tmpCount = 0 To (UBound(arrFieldNames))
            ' Test for a header row.
            If blnHeaderRow = True Then
              arrFieldNames(tmpCount) = arrCurrentRecord(tmpCount)
            Else
              arrFieldNames(tmpCount) = "Field" & (tmpCount+1)
            End If
          Next
          ' Test for a header row.
          If blnHeaderRow = True Then
            ' Retrieve a second record.
            Call ReadRecord()
          End If
          ' Redimension the array of field types.
          ReDim arrFieldTypes(UBound(arrCurrentRecord))
          ' Loop through the record fields.
          For tmpCount = 0 To (UBound(arrFieldTypes))
            ' Test if the current field contains a date.
            If IsDate(arrCurrentRecord(tmpCount)) Then
              ' Specify the field type as a timestamp.
              arrFieldTypes(tmpCount) = TYPE_TIMESTAMP
            ' Test if the current field contains a number.
            ElseIf IsNumeric(arrCurrentRecord(tmpCount)) Then
              ' Test if the current field contains a decimal.
              If InStr(arrCurrentRecord(tmpCount),".") Then
                ' Specify the field type as a real number.
                arrFieldTypes(tmpCount) = TYPE_REAL
              Else
                ' Specify the field type as an integer.
                arrFieldTypes(tmpCount) = TYPE_INTEGER
              End If
            ' Test if the current field is null.
            ElseIf IsNull(arrCurrentRecord(tmpCount)) Then
              ' Specify the field type as NULL.
              arrFieldTypes(tmpCount) = TYPE_NULL
            ' Test if the current field is empty.
            ElseIf IsEmpty(arrCurrentRecord(tmpCount)) Then
              ' Specify the field type as NULL.
              arrFieldTypes(tmpCount) = TYPE_NULL
            ' Otherwise, assume it's a string.
            Else
              ' Specify the field type as a string.
              arrFieldTypes(tmpCount) = TYPE_STRING
            End If
          Next
          ' Temporarily close the log file.
          objFile.Close
          ' Re-open the specified file.
          Set objFile = objFSO.OpenTextFile(strFileName,1,False)
          ' Test for a header row.
          If blnHeaderRow = True Then
            ' Skip the first row.
            objFile.SkipLine
          End If
          ' Return success status.
          OpenInput = 0
        Else
          ' Return a file not found status.
          OpenInput = 2
        End If
      End If
    End Function

    ' --------------------------------------------------------------------------------
    ' Close the input session.
    ' --------------------------------------------------------------------------------

    Public Function CloseInput(blnAbort)
      ' Close the log file if it is open.
      If blnFileOpen = True Then objFile.Close
      ' Free the objects.
      Set objFile = Nothing
      Set objFSO = Nothing
      ' Set a flag to indicate that the specified file is closed.
      blnFileOpen = False
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the count of fields.
    ' --------------------------------------------------------------------------------

    Public Function GetFieldCount()
      ' Specify the default value.
      GetFieldCount = 0
      ' Test if a file is open.
      If (blnFileOpen = True) Then
        ' Test for the number of field names (a single-column log has UBound = 0).
        If UBound(arrFieldNames) >= 0 Then
          ' Return the count of fields.
          GetFieldCount = UBound(arrFieldNames) + 1
        End If
      End If
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the specified field's name.
    ' --------------------------------------------------------------------------------

    Public Function GetFieldName(intFieldIndex)
      ' Specify the default value.
      GetFieldName = Null
      ' Test if a file is open.
      If (blnFileOpen = True) Then
        ' Test if the index is valid.
        If intFieldIndex<=UBound(arrFieldNames) Then
          ' Return the specified field name.
          GetFieldName = arrFieldNames(intFieldIndex)
        End If
      End If
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the specified field's type.
    ' --------------------------------------------------------------------------------

    Public Function GetFieldType(intFieldIndex)
      ' Specify the default value.
      GetFieldType = Null
      ' Test if a file is open.
      If (blnFileOpen = True) Then
        ' Test if the index is valid.
        If intFieldIndex<=UBound(arrFieldTypes) Then
          ' Return the specified field type.
          GetFieldType = arrFieldTypes(intFieldIndex)
        End If
      End If
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the specified field's value.
    ' --------------------------------------------------------------------------------

    Public Function GetValue(intFieldIndex)
      ' Specify the default value.
      GetValue = Null
      ' Test if a file is open.
      If (blnFileOpen = True) Then
        ' Test if the index is valid.
        If intFieldIndex<=UBound(arrCurrentRecord) Then
          ' Return the specified field value based on the field type.
          ' (CLng is used for integers because CInt overflows above 32,767.)
          Select Case arrFieldTypes(intFieldIndex)
            Case TYPE_INTEGER:
              GetValue = CLng(arrCurrentRecord(intFieldIndex))
            Case TYPE_REAL:
              GetValue = CDbl(arrCurrentRecord(intFieldIndex))
            Case TYPE_STRING:
              GetValue = CStr(arrCurrentRecord(intFieldIndex))
            Case TYPE_TIMESTAMP:
              GetValue = CDate(arrCurrentRecord(intFieldIndex))
            Case Else
              GetValue = Null
          End Select
        End If
      End If
    End Function

    ' --------------------------------------------------------------------------------
    ' Read the next record, and return true or false if there is more data.
    ' --------------------------------------------------------------------------------

    Public Function ReadRecord()
      ' Specify the default value.
      ReadRecord = False
      ' Test if a file is open.
      If (blnFileOpen = True) Then
        ' Test if there is more data.
        If objFile.AtEndOfStream Then
          ' Flag the log file as having no more data.
          ReadRecord = False
        Else
          ' Read the current record.
          arrCurrentRecord = Split(objFile.ReadLine,strSeparator)
          ' Flag the log file as having more data to process.
          ReadRecord = True
        End If
      End If
    End Function

      </SCRIPT>
    </SCRIPTLET>

After you have saved the scriptlet code to your computer, you register it by using the following syntax:

regsvr32 Generic.LogParser.Scriptlet.sct

At the very minimum, you can now use the COM plug-in with Log Parser by using syntax like the following:

logparser "SELECT * FROM 'C:\Foo\Bar.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet

Next, let's analyze what this sample does.

Examining the Generic Scriptlet in Detail

Here are the different parts of the scriptlet and what they do:

- The <registration> section of the scriptlet sets up the COM registration information; you'll notice the COM component class name and GUID, as well as version information and a general description. (Note that you should generate your own GUID for each scriptlet that you create.)

- The <implements> section declares the public methods that the COM plug-in has to support.

- The <script> section contains the actual implementation:

    - The first part of the script section declares the global variables that will be used:

        - The strSeparator constant defines the delimiter that is used to separate the data between fields/columns in a text-based log file.

        - The blnHeaderRow constant defines whether the first row in a text-based log file contains the names of the fields/columns:

            - If set to True, the plug-in will use the data in the first line of the log file to name the fields/columns.

            - If set to False, the plug-in will define generic field/column names like "Field1", "Field2", etc.

    - The second part of the script contains the required methods:

        - The OpenInput() method performs several tasks:

            - Locates and opens the log file that you specify in your SQL statement, or returns an error if the log file cannot be found.

            - Determines the number, names, and data types of fields/columns in the log file.

        - The CloseInput() method cleans up the session by closing the log file and destroying objects.

        - The GetFieldCount() method returns the number of fields/columns in the log file.

        - The GetFieldName() method returns the name of a field/column in the log file.

        - The GetFieldType() method returns the data type of a field/column in the log file. As a reminder, Log Parser supports the following five data types for COM plug-ins: TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, and TYPE_NULL.

        - The GetValue() method returns the data value of a field/column in the log file.

        - The ReadRecord() method moves to the next line in the log file. This method returns True if there is additional data to read, or False when the end of data is reached.

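The way OpenInput() guesses a data type for each field from the first data record can be illustrated with a short Python sketch. This is not the scriptlet's code: VBScript's IsDate() and IsNumeric() accept more formats than the helpers below, and the assumption here is that only ISO-style dates (YYYY-MM-DD) count as dates. The function names are hypothetical.

```python
from datetime import datetime

TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL = 1, 2, 3, 4, 5


def is_date(text):
    """Assumption: only ISO-style dates (YYYY-MM-DD) are treated as dates."""
    try:
        datetime.strptime(text, "%Y-%m-%d")
        return True
    except ValueError:
        return False


def is_numeric(text):
    """True if the text parses as a number."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def infer_type(text):
    """Apply the same kinds of tests the scriptlet uses: date, number, null, string."""
    if text is None:
        return TYPE_NULL
    if is_date(text):
        return TYPE_TIMESTAMP
    if is_numeric(text):
        # A decimal point marks a real number; otherwise treat it as an integer.
        return TYPE_REAL if "." in text else TYPE_INTEGER
    return TYPE_STRING


record = "bk101|2000-10-01|Gambardella, Matthew|XML Developer's Guide|44.950000".split("|")
print([infer_type(field) for field in record])
```

One consequence of this design is worth keeping in mind: the types are decided from a single record, so a column whose first value looks like an integer will be treated as an integer for the whole file.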

Next, let's look at how to use the sample.

Using the Generic Scriptlet with Log Parser

As a sample log file for this blog, I'm going to use the data in the Sample XML File (books.xml) from MSDN. By running a quick Log Parser query that I will show later, I was able to export data from the XML file into a text file named "books.log" that represents an example of a simple log file format that I have had to work with in the past:

    id|publish_date|author|title|price
    bk101|2000-10-01|Gambardella, Matthew|XML Developer's Guide|44.950000
    bk102|2000-12-16|Ralls, Kim|Midnight Rain|5.950000
    bk103|2000-11-17|Corets, Eva|Maeve Ascendant|5.950000
    bk104|2001-03-10|Corets, Eva|Oberon's Legacy|5.950000
    bk105|2001-09-10|Corets, Eva|The Sundered Grail|5.950000
    bk106|2000-09-02|Randall, Cynthia|Lover Birds|4.950000
    bk107|2000-11-02|Thurman, Paula|Splish Splash|4.950000
    bk108|2000-12-06|Knorr, Stefan|Creepy Crawlies|4.950000
    bk109|2000-11-02|Kress, Peter|Paradox Lost|6.950000
    bk110|2000-12-09|O'Brien, Tim|Microsoft .NET: The Programming Bible|36.950000
    bk111|2000-12-01|O'Brien, Tim|MSXML3: A Comprehensive Guide|36.950000
    bk112|2001-04-16|Galos, Mike|Visual Studio 7: A Comprehensive Guide|49.950000

In this example, the data is pretty easy to understand - the first row contains the list of field/column names, and the fields/columns are separated by the pipe ("|") character throughout the log file. That being said, you could easily change my sample code to use a different delimiter that your custom log files use.
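For readers who want to see the separator and header-row ideas in isolation, here is a small Python sketch of the same parsing approach. It is only an illustration: the `parse_log` function and its parameters are hypothetical, and unlike the scriptlet it skips blank lines.

```python
def parse_log(lines, separator="|", header_row=True):
    """Split delimited lines into a list of field names and a list of records.

    With header_row=True the first line supplies the field names; otherwise
    generic names (Field1, Field2, ...) are generated.
    """
    # Assumption: blank lines are ignored (the scriptlet does not do this).
    rows = [line.rstrip("\r\n").split(separator) for line in lines if line.strip()]
    if not rows:
        return [], []
    if header_row:
        names, records = rows[0], rows[1:]
    else:
        names = ["Field%d" % (i + 1) for i in range(len(rows[0]))]
        records = rows
    return names, records


sample = [
    "id|publish_date|author|title|price\n",
    "bk101|2000-10-01|Gambardella, Matthew|XML Developer's Guide|44.950000\n",
    "bk102|2000-12-16|Ralls, Kim|Midnight Rain|5.950000\n",
]
names, records = parse_log(sample)
print(names)
print(records[0])
```

Changing the delimiter is then just a matter of passing a different `separator` value, which mirrors editing the strSeparator constant in the scriptlet.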

With that in mind, let's look at some Log Parser examples.

Example #1: Retrieving Data from a Custom Log

The first thing that you should try is to simply retrieve data from your custom plug-in, and the following query should serve as an example:

logparser "SELECT * FROM 'C:\sample\books.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet

The above query will return results like the following:

| id | publish_date | author | title | price |
|---|---|---|---|---|
| bk101 | 10/1/2000 0:00:00 | Gambardella, Matthew | XML Developer's Guide | 44.950000 |
| bk102 | 12/16/2000 0:00:00 | Ralls, Kim | Midnight Rain | 5.950000 |
| bk103 | 11/17/2000 0:00:00 | Corets, Eva | Maeve Ascendant | 5.950000 |
| bk104 | 3/10/2001 0:00:00 | Corets, Eva | Oberon's Legacy | 5.950000 |
| bk105 | 9/10/2001 0:00:00 | Corets, Eva | The Sundered Grail | 5.950000 |
| bk106 | 9/2/2000 0:00:00 | Randall, Cynthia | Lover Birds | 4.950000 |
| bk107 | 11/2/2000 0:00:00 | Thurman, Paula | Splish Splash | 4.950000 |
| bk108 | 12/6/2000 0:00:00 | Knorr, Stefan | Creepy Crawlies | 4.950000 |
| bk109 | 11/2/2000 0:00:00 | Kress, Peter | Paradox Lost | 6.950000 |
| bk110 | 12/9/2000 0:00:00 | O'Brien, Tim | Microsoft .NET: The Programming Bible | 36.950000 |
| bk111 | 12/1/2000 0:00:00 | O'Brien, Tim | MSXML3: A Comprehensive Guide | 36.950000 |
| bk112 | 4/16/2001 0:00:00 | Galos, Mike | Visual Studio 7: A Comprehensive Guide | 49.950000 |

While the above example works as a good proof-of-concept for functionality, it's not overly useful, so let's look at additional examples.

Example #2: Reformatting Log File Data

Once you have established that you can retrieve data from your custom plug-in, you can start taking advantage of Log Parser's features to process your log file data. In this example, I will use several of the built-in functions to reformat the data:

logparser "SELECT id AS ID, TO_DATE(publish_date) AS Date, author AS Author, SUBSTR(title,0,20) AS Title, STRCAT(TO_STRING(TO_INT(FLOOR(price))),SUBSTR(TO_STRING(price),INDEX_OF(TO_STRING(price),'.'),3)) AS Price FROM 'C:\sample\books.log'" -i:COM -iProgID:Generic.LogParser.Scriptlet

The above query will return results like the following:

| ID | Date | Author | Title | Price |
|---|---|---|---|---|
| bk101 | 10/1/2000 | Gambardella, Matthew | XML Developer's Guid | 44.95 |
| bk102 | 12/16/2000 | Ralls, Kim | Midnight Rain | 5.95 |
| bk103 | 11/17/2000 | Corets, Eva | Maeve Ascendant | 5.95 |
| bk104 | 3/10/2001 | Corets, Eva | Oberon's Legacy | 5.95 |
| bk105 | 9/10/2001 | Corets, Eva | The Sundered Grail | 5.95 |
| bk106 | 9/2/2000 | Randall, Cynthia | Lover Birds | 4.95 |
| bk107 | 11/2/2000 | Thurman, Paula | Splish Splash | 4.95 |
| bk108 | 12/6/2000 | Knorr, Stefan | Creepy Crawlies | 4.95 |
| bk109 | 11/2/2000 | Kress, Peter | Paradox Lost | 6.95 |
| bk110 | 12/9/2000 | O'Brien, Tim | Microsoft .NET: The | 36.95 |
| bk111 | 12/1/2000 | O'Brien, Tim | MSXML3: A Comprehens | 36.95 |
| bk112 | 4/16/2001 | Galos, Mike | Visual Studio 7: A C | 49.95 |

This example formats the dates and prices a little more cleanly, and it truncates the book titles at 20 characters so they fit a little better on some screens.
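The nested price expression in that query is the hardest part to read, so here is a minimal Python sketch of what it appears to compute. This is an interpretation of the query rather than Log Parser code: it assumes the price arrives as text such as "44.950000", and the function names are hypothetical.

```python
import math


def format_price(price_text):
    """Keep the whole part plus the decimal point and two digits.

    Mirrors STRCAT(TO_STRING(TO_INT(FLOOR(price))),
                   SUBSTR(TO_STRING(price), INDEX_OF(TO_STRING(price), '.'), 3)).
    """
    whole = str(int(math.floor(float(price_text))))
    dot = price_text.index(".")  # raises ValueError if there is no decimal point
    return whole + price_text[dot:dot + 3]


def truncate_title(title, length=20):
    """Mirror SUBSTR(title, 0, 20): keep the first 20 characters."""
    return title[:length]


print(format_price("44.950000"))
print(truncate_title("XML Developer's Guide"))
```

Note that this truncates the extra digits rather than rounding them, which is fine for the sample data but would matter for a value like 4.999000.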

Example #3: Processing Log File Data

In addition to simply reformatting your data, you can use Log Parser to group, sort, count, total, etc., your data. The following example illustrates how to use Log Parser to count the number of books by author in the log file:

logparser "SELECT author AS Author, COUNT(Title) AS Books FROM 'C:\sample\books.log' GROUP BY Author ORDER BY Author" -i:COM -iProgID:Generic.LogParser.Scriptlet

The above query will return results like the following:

| Author | Books |
|---|---|
| Corets, Eva | 3 |
| Galos, Mike | 1 |
| Gambardella, Matthew | 1 |
| Knorr, Stefan | 1 |
| Kress, Peter | 1 |
| O'Brien, Tim | 2 |
| Ralls, Kim | 1 |
| Randall, Cynthia | 1 |
| Thurman, Paula | 1 |

The results are pretty straightforward: Log Parser parses the data and presents you with a list of alphabetized authors and the total number of books that were written by each author.
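If the GROUP BY and ORDER BY clauses are unfamiliar, the following Python sketch shows the equivalent counting logic on the author column of the sample log. It is only an analogy for what the query does; the `books_by_author` function is hypothetical.

```python
from collections import Counter


def books_by_author(authors):
    """Return (author, count) pairs sorted by author, like GROUP BY ... ORDER BY."""
    counts = Counter(authors)
    return sorted(counts.items())


# The author column from the sample books.log file.
sample_authors = [
    "Gambardella, Matthew", "Ralls, Kim", "Corets, Eva", "Corets, Eva",
    "Corets, Eva", "Randall, Cynthia", "Thurman, Paula", "Knorr, Stefan",
    "Kress, Peter", "O'Brien, Tim", "O'Brien, Tim", "Galos, Mike",
]

for author, books in books_by_author(sample_authors):
    print(author, books)
```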

Example #4: Creating Charts

You can also use data from your custom log file to create charts through Log Parser. If I modify the above example, all that I need to do is add a few parameters to create a chart:

logparser "SELECT author AS Author, COUNT(Title) AS Books INTO Authors.gif FROM 'C:\sample\books.log' GROUP BY Author ORDER BY Author" -i:COM -iProgID:Generic.LogParser.Scriptlet -fileType:GIF -groupSize:800x600 -chartType:Pie -categories:OFF -values:ON -legend:ON

The above query will create a chart like the following:

I admit that it's not a very pretty-looking chart - you can look at the other posts in my Log Parser series for some examples about making Log Parser charts more interesting.

Summary

In this blog post and my last post, I have illustrated a few examples that should help developers get started writing their own custom input format plug-ins for Log Parser. As I mentioned in each of the blog posts where I have used scriptlets for the COM objects, I would typically use C# or C++ to create a COM component, but using a scriptlet is much easier for demos because it doesn't require installing Visual Studio and compiling a DLL.

There is one last thing that I would like to mention before I finish this blog; I mentioned earlier that I had used Log Parser to reformat the sample Books.xml file into a generic log file that I could use for the examples in this blog. Since Log Parser supports XML as an input format and it allows you to customize your output, I wrote the following simple Log Parser query to reformat the XML data into a format that I had often seen used for text-based log files:

logparser.exe "SELECT id,publish_date,author,title,price INTO books.log FROM books.xml" -i:xml -o:tsv -headers:ON -oSeparator:"|"

Actually, this ability to change data formats is one of the hidden gems of Log Parser; I have often used Log Parser to change the data from one type of log file to another - usually so that a different program can access the data. For example, if you were given the log file with a pipe ("|") delimiter like I used as an example, you could easily use Log Parser to convert that data into the CSV format so you could open it in Excel:

logparser.exe "SELECT id,publish_date,author,title,price INTO books.csv FROM books.log" -i:tsv -o:csv -headers:ON -iSeparator:"|" -oDQuotes:on
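For comparison, the same kind of pipe-to-CSV conversion can be sketched with Python's csv module. This is an analogy rather than a replacement for the Log Parser command: the `pipe_to_csv` function is hypothetical, and it uses the module's default quoting (only fields that need quotes are quoted), which may not match what Log Parser produces with the -oDQuotes setting.

```python
import csv
import io


def pipe_to_csv(pipe_text):
    """Convert pipe-delimited text to CSV text."""
    # QUOTE_NONE: treat quotes and apostrophes in the input as ordinary characters.
    reader = csv.reader(io.StringIO(pipe_text), delimiter="|", quoting=csv.QUOTE_NONE)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerows(reader)
    return out.getvalue()


sample = (
    "id|author|title\n"
    "bk101|Gambardella, Matthew|XML Developer's Guide\n"
)
print(pipe_to_csv(sample))
```

The author field is the interesting one here: because it contains a comma, it has to be quoted in the CSV output so that Excel does not split it into two columns.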

I hope these past few blog posts help you to get started writing your own custom input format plug-ins for Log Parser.

That's all for now. ;-)

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Advanced Log Parser Part 6 - Creating a Simple Custom Input Format Plug-In

27 February 2013 • by Bob • LogParser, Scripting

In Part 4 of this series, I illustrated how to create a new COM-based input provider for Log Parser from a custom input format:

Advanced Log Parser Charts Part 4 - Adding Custom Input Formats

For the sample that I published in that blog, I wrote a plug-in that consumed FTP RSCA events, which is highly structured data, and it added a lot of complexity to my example. In the past ten months or so since I published my original blog, I've had several requests for additional information about how to get started writing COM-based input formats for Log Parser, so it occurred to me that perhaps I could have shown a simpler example to get people started instead of diving straight into parsing RSCA data. ;-)

With that in mind, I thought that I would write a couple of blog posts with simpler examples to help anyone who wants to get started writing custom input formats for Log Parser.

For this blog post, I will show you how to create a very basic COM-based input format provider for Log Parser that simply returns static data; you could use this sample as a template to quickly get up-and-running with the basic concepts. (I promise to follow this blog with another real-world example that is still easier-to-use than my RSCA example.)

A Reminder about Creating COM-based plug-ins for Log Parser

In the blog that I referred to earlier, I mentioned that a COM plug-in has to support the following public methods:

| Method Name | Description |
|---|---|
| OpenInput | Opens your data source and sets up any initial environment settings. |
| GetFieldCount | Returns the number of fields that your plug-in will provide. |
| GetFieldName | Returns the name of a specified field. |
| GetFieldType | Returns the datatype of a specified field. |
| GetValue | Returns the value of a specified field. |
| ReadRecord | Reads the next record from your data source. |
| CloseInput | Closes your data source and cleans up any environment settings. |

Once you have created and registered a COM plug-in, you call it by using something like the following syntax:

logparser.exe "SELECT * FROM FOO" -i:COM -iProgID:BAR

In the preceding example, FOO is a data source that makes sense to your plug-in, and BAR is the COM class name for your plug-in.
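To show how those methods fit together, here is a rough Python sketch of a host calling a plug-in. The call order in `run_query` is an assumption about how a host such as Log Parser drives a plug-in, not documented behavior, and the `StaticPlugin` class is a hypothetical stand-in that simply returns static data.

```python
import datetime

TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL = 1, 2, 3, 4, 5


class StaticPlugin:
    """Hypothetical stand-in for a COM input format plug-in with static data."""

    MAX_RECORDS = 5
    FIELDS = [("INTEGER", TYPE_INTEGER), ("REAL", TYPE_REAL),
              ("STRING", TYPE_STRING), ("TIMESTAMP", TYPE_TIMESTAMP),
              ("NULL", TYPE_NULL)]

    def OpenInput(self, source):
        self.record_count = 0  # the source name is ignored in this sketch

    def GetFieldCount(self):
        return len(self.FIELDS)

    def GetFieldName(self, index):
        return self.FIELDS[index][0]

    def GetFieldType(self, index):
        return self.FIELDS[index][1]

    def ReadRecord(self):
        # Return True while there is another record to hand out.
        self.record_count += 1
        return self.record_count <= self.MAX_RECORDS

    def GetValue(self, index):
        values = [1, 1.0, "One", datetime.datetime(2013, 2, 26, 19, 42, 12), None]
        return values[index]

    def CloseInput(self, abort):
        pass  # nothing to clean up for static data


def run_query(plugin, source):
    """Assumed call order: open, describe the fields, read records, close."""
    plugin.OpenInput(source)
    count = plugin.GetFieldCount()
    names = [plugin.GetFieldName(i) for i in range(count)]
    rows = []
    while plugin.ReadRecord():
        rows.append([plugin.GetValue(i) for i in range(count)])
    plugin.CloseInput(False)
    return names, rows


names, rows = run_query(StaticPlugin(), "FOOBAR")
print(names)
print(len(rows))
```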

Creating a Simple COM plug-in for Log Parser

Once again, I'm going to demonstrate how to create a COM component by using a scriptlet, which I like to use for demos because they are quick to design, they're easily portable, and updates take place immediately since no compilation is required. (All of that being said, if I were writing a real COM plug-in for Log Parser, I would use C# or C++.)

To create the sample COM plug-in, copy the following code into a text file, and save that file as "Simple.LogParser.Scriptlet.sct" to your computer. (Note: The *.SCT file extension tells Windows that this is a scriptlet file.)

    <SCRIPTLET>
      <registration
        Description="Simple Log Parser Scriptlet"
        Progid="Simple.LogParser.Scriptlet"
        Classid="{4e616d65-6f6e-6d65-6973-526f62657274}"
        Version="1.00"
        Remotable="False" />
      <comment>
      EXAMPLE: logparser "SELECT * FROM FOOBAR" -i:COM -iProgID:Simple.LogParser.Scriptlet
      </comment>
      <implements id="Automation" type="Automation">
        <method name="OpenInput">
          <parameter name="strValue"/>
        </method>
        <method name="GetFieldCount" />
        <method name="GetFieldName">
          <parameter name="intFieldIndex"/>
        </method>
        <method name="GetFieldType">
          <parameter name="intFieldIndex"/>
        </method>
        <method name="ReadRecord" />
        <method name="GetValue">
          <parameter name="intFieldIndex"/>
        </method>
        <method name="CloseInput">
          <parameter name="blnAbort"/>
        </method>
      </implements>
      <SCRIPT LANGUAGE="VBScript">

    Option Explicit

    Const MAX_RECORDS = 5
    Dim intRecordCount

    ' --------------------------------------------------------------------------------
    ' Open the input session.
    ' --------------------------------------------------------------------------------

    Public Function OpenInput(strValue)
      intRecordCount = 0
    End Function

    ' --------------------------------------------------------------------------------
    ' Close the input session.
    ' --------------------------------------------------------------------------------

    Public Function CloseInput(blnAbort)
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the count of fields.
    ' --------------------------------------------------------------------------------

    Public Function GetFieldCount()
      GetFieldCount = 5
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the specified field's name.
    ' --------------------------------------------------------------------------------

    Public Function GetFieldName(intFieldIndex)
      Select Case CInt(intFieldIndex)
        Case 0:
          GetFieldName = "INTEGER"
        Case 1:
          GetFieldName = "REAL"
        Case 2:
          GetFieldName = "STRING"
        Case 3:
          GetFieldName = "TIMESTAMP"
        Case 4:
          GetFieldName = "NULL"
        Case Else
          GetFieldName = Null
      End Select
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the specified field's type.
    ' --------------------------------------------------------------------------------

    Public Function GetFieldType(intFieldIndex)
      ' Define the field type constants.
      Const TYPE_INTEGER   = 1
      Const TYPE_REAL      = 2
      Const TYPE_STRING    = 3
      Const TYPE_TIMESTAMP = 4
      Const TYPE_NULL      = 5
      Select Case CInt(intFieldIndex)
        Case 0:
          GetFieldType = TYPE_INTEGER
        Case 1:
          GetFieldType = TYPE_REAL
        Case 2:
          GetFieldType = TYPE_STRING
        Case 3:
          GetFieldType = TYPE_TIMESTAMP
        Case 4:
          GetFieldType = TYPE_NULL
        Case Else
          GetFieldType = Null
      End Select
    End Function

    ' --------------------------------------------------------------------------------
    ' Return the specified field's value.
    ' --------------------------------------------------------------------------------

    Public Function GetValue(intFieldIndex)
      Select Case CInt(intFieldIndex)
        Case 0:
          GetValue = 1
        Case 1:
          GetValue = 1.0
        Case 2:
          GetValue = "One"
        Case 3:
          GetValue = Now
        Case Else
          GetValue = Null
      End Select
    End Function

    ' --------------------------------------------------------------------------------
    ' Read the next record, and return true or false if there is more data.
    ' --------------------------------------------------------------------------------

    Public Function ReadRecord()
      intRecordCount = intRecordCount + 1
      If intRecordCount <= MAX_RECORDS Then
        ReadRecord = True
      Else
        ReadRecord = False
      End If
    End Function

      </SCRIPT>
    </SCRIPTLET>

After you have saved the scriptlet code to your computer, you register it by using the following syntax:

regsvr32 Simple.LogParser.Scriptlet.sct

At the very minimum, you can now use the COM plug-in with Log Parser by using syntax like the following:

logparser "SELECT * FROM FOOBAR" -i:COM -iProgID:Simple.LogParser.Scriptlet

This will return results like the following:

| INTEGER | REAL | STRING | TIMESTAMP | NULL |
|---|---|---|---|---|
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |
| 1 | 1.000000 | One | 2/26/2013 19:42:12 | - |

| Statistics | |
|---|---|
| Elements processed: | 5 |
| Elements output: | 5 |
| Execution time: | 0.01 seconds |

Next, let's analyze what this sample does.

Examining the Sample Scriptlet Contents in Detail

Here are the different parts of the scriptlet and what they do:

- The <registration> section of the scriptlet sets up the COM registration information; you'll notice the COM component class name and GUID, as well as version information and a general description. (Note that you should generate your own GUID for each scriptlet that you create.)

- The <implements> section declares the public methods that the COM plug-in has to support.

- The <script> section contains the actual implementation:

  - The OpenInput() method opens your data source, although in this example it only initializes the record count. (Note that the value that is passed to the method is ignored in this example.)

  - The CloseInput() method would normally clean up your session (e.g. close a data file or database), but it doesn't do anything in this example.

  - The GetFieldCount() method returns the number of data fields in each record of your data, which is static in this example.

  - The GetFieldName() method returns the name of a field whose index is passed to the method as a number; the names are static in this example.

  - The GetFieldType() method returns the data type of a field whose index is passed to the method as a number; the types are statically defined in this example. As a reminder, Log Parser supports the following five data types for COM plug-ins: TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, and TYPE_NULL.

  - The GetValue() method returns the data value of a field whose index is passed to the method as a number. Once again, the data values are statically defined in this example.

  - The ReadRecord() method moves to the next record in your data set; this method returns True if there is data to read, or False when the end of data is reached. In this example, the method increments the record counter and sets the status based on whether the maximum number of records has been reached.
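To make the calling sequence easier to picture, here is a rough Python model of the scriptlet and of a host that drives it. This is only a sketch: the SimpleProvider class mirrors the scriptlet's methods and static data, while run_query() is a hypothetical stand-in for Log Parser, and the order in which it calls the methods is my assumption rather than Log Parser's documented internals.

```python
from datetime import datetime

# Field type constants, mirroring the values defined in the scriptlet.
TYPE_INTEGER, TYPE_REAL, TYPE_STRING, TYPE_TIMESTAMP, TYPE_NULL = 1, 2, 3, 4, 5

MAX_RECORDS = 5


class SimpleProvider:
    """Python stand-in for the scriptlet: same methods, same static data."""

    FIELDS = [
        ("INTEGER", TYPE_INTEGER),
        ("REAL", TYPE_REAL),
        ("STRING", TYPE_STRING),
        ("TIMESTAMP", TYPE_TIMESTAMP),
        ("NULL", TYPE_NULL),
    ]

    def open_input(self, value):
        # The FROM entity is ignored; only the record counter is reset.
        self.record_count = 0

    def close_input(self, abort):
        # Nothing to clean up in this example.
        pass

    def get_field_count(self):
        return len(self.FIELDS)

    def get_field_name(self, index):
        return self.FIELDS[index][0]

    def get_field_type(self, index):
        return self.FIELDS[index][1]

    def read_record(self):
        # Advance to the next record; False signals the end of the data.
        self.record_count += 1
        return self.record_count <= MAX_RECORDS

    def get_value(self, index):
        # Static values; the last field is always null.
        return [1, 1.0, "One", datetime.now(), None][index]


def run_query(provider, from_entity):
    """Hypothetical host loop (an assumption, not Log Parser's real code)."""
    provider.open_input(from_entity)
    try:
        count = provider.get_field_count()
        names = [provider.get_field_name(i) for i in range(count)]
        types = [provider.get_field_type(i) for i in range(count)]
        rows = []
        while provider.read_record():
            rows.append([provider.get_value(i) for i in range(count)])
    finally:
        provider.close_input(False)
    return names, types, rows


names, types, rows = run_query(SimpleProvider(), "FOOBAR")
print(names)      # the five field names
print(len(rows))  # 5, matching MAX_RECORDS
```

The point of the model is that the provider never loops by itself; the host keeps calling ReadRecord() until it returns False, and it asks for each field value by index in between.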

Summary

That wraps up the simplest example that I could put together of a COM-based input provider for Log Parser. In my next blog, I'll show how to create a generic COM-based input provider for Log Parser that you can use to parse text-based log files.

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

Replacing the Windows 8 Start Menu

13 February 2013 • by Bob • Windows

As most people who have installed Windows 8 have realized by now, this new version of Windows is missing something... something very important: a real Start Menu. In their efforts to make Windows more

This design was so clunky and so confusing for users that it resulted in the following actual advertisement outside a local computer repair shop:

The Windows 8 user experience was so bad that none of the older members of my family were able to use it, so I set out to find a replacement for the missing start menu; something which would make Windows 8 look and feel like using Windows 7. With that in mind, I tried out several Windows 8 Start Menu applications with mixed results. I did all of my testing on a desktop version of Windows 8, but all of these will work on the Microsoft Surface Pro tablet, although they will not work on the original ARM-based Microsoft Surface tablet. (See my notes below about that.)

All that being said, here are some of the better Start Menu replacements that I tested:

Start8:

- URL: http://www.stardock.com/products/start8/

- Pricing: $4.99

- Rating: GREAT

- Feedback: I really liked this start menu; it worked well and it had lots of options - not as many options as some of the menus for which I only gave a GOOD rating, but it was still pretty darn cool. Once you install this start menu system and have it boot into desktop mode, Windows 8 is almost exactly like using Windows 7. (Note that you can buy a license for this application that is bundled together with their ModernMix application which allows you to run Windows Store applications in a window.)

Classic Shell:

- URL: http://www.classicshell.net/

- Pricing: FREE

- Rating: GOOD

- Feedback: This start menu has lots of configurable options so it's very customizable, but its "Windows 7" start menu is basically the same as its Windows XP start menu with a Windows 7 theme, whereas Start8's Windows 7 start menu is the actual menu style that you expect. That said, since it's open-source you could modify it yourself. ;-)

Start Menu X aka Start Button 8:

- URL: http://www.startmenux.com/ or http://www.startbutton8.com/

- Pricing: FREE, although there is a pro version for $19.99

- Rating: GOOD

- Feedback: This start menu has a smattering of options, and it is definitely its own beast in terms of what you get for a start menu. But that being said, it does give you a start menu, just not one that you are used to or expecting.

Classic Start 8:

- URL: http://www.classicstart8.com/

- Pricing: FREE

- Rating: ACCEPTABLE

- Feedback: This start menu has no configurable options, so it cannot be customized. But that being said, its start menu is basically the same as a "Windows 7" start menu. Still, if you need a great freeware approach to getting the start menu back, you can't beat this.

- UPDATE: This start menu also adds some spamware links to the start menu, so I'm not a big fan of this offering.

RetroUI:

- URL: http://retroui.com/

- Pricing: Starts at $4.95 for 1 Consumer Activation and goes up from there

- Rating: TERRIBLE

- Feedback: I did not like this start menu at all - it was cumbersome and confusing and it looked awful. (They were trying to go with a Metro-styled start menu, and it just didn't work).

By the way, I wrote to two companies that make Start Menus for Windows 8, and neither will make their product available for Windows RT; they say that the sandboxing features in Windows RT prevent a start menu replacement from working properly. So if you have an original Microsoft Surface RT tablet, not the Microsoft Surface Pro, you're out of luck. :-(

FWIW - here are some URLs that I looked at with discussions about this topic:

FTP Clients - Part 12: BitKinex

31 January 2013 • by Bob • FTP

For this installment in my series about FTP clients, I want to take a look at BitKinex 3, which is an FTP client from Barad-Dur, LLC. For this blog I used BitKinex 3.2.3, and it is available from the following URL:

At the time of this blog post, BitKinex 3 is available for free, and it contains a bunch of features that make it an appealing FTP and WebDAV client.

Fig. 1 - The Help/About dialog in BitKinex 3.

BitKinex 3 Overview

When you open BitKinex 3, it shows four connection types (which it refers to as Data Sources): FTP, HTTP/WebDAV, SFTP/SSH, and My Computer. The main interface is analogous to what you would expect in a Site Manager with other FTP clients - you can define new data sources (connections) to FTP sites and websites:

Fig. 2 - The main BitKinex 3 window.

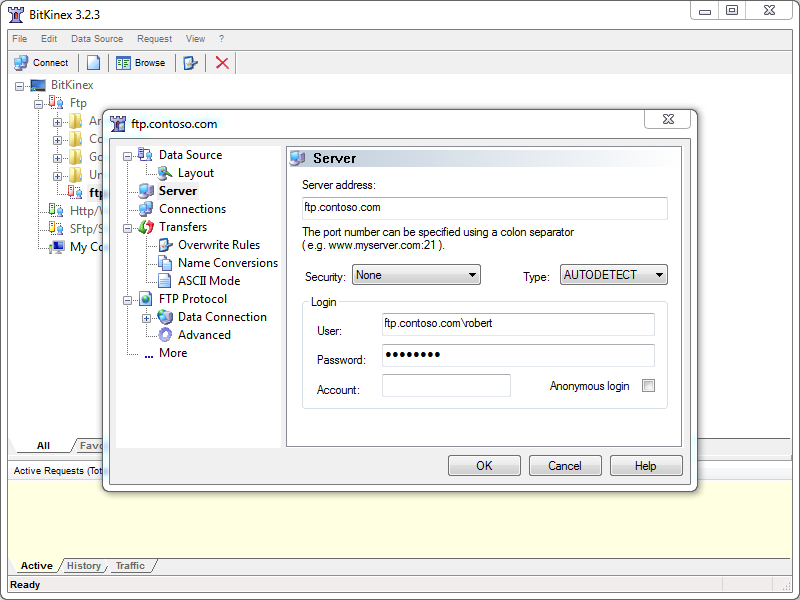

Creating an FTP data source is pretty straightforward, and there are a fair number of options that you can specify. What's more, data sources can have individual options specified, or they can inherit from a parent node.

Fig. 3 - Creating a new FTP data source.

Fig. 4 - Specifying the options for an FTP data source.

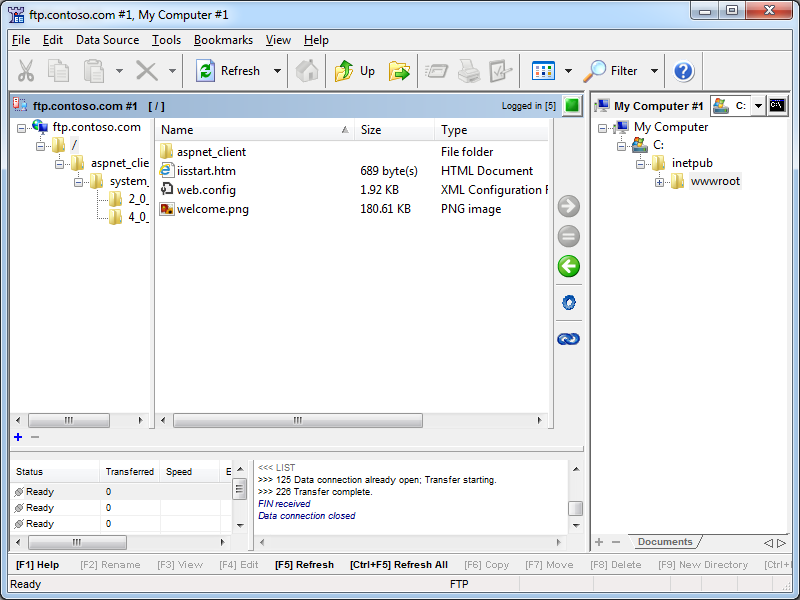

Once a data source has connected, a child window will open and display the folder trees for your local and remote content. (Note: there are several options for customizing how each data source can be displayed.)

Fig. 5 - An open FTP data source.

BitKinex 3 has support for command-line automation, which is pretty handy if you do a lot of scripting like I do. Documentation about automating BitKinex 3 from the command line is available on the BitKinex website at the following URL:

BitKinex Command Line Interface

That being said, the documentation is a bit sparse and there are few examples, so I didn't attempt anything ambitious from a command line during my testing.

Using BitKinex 3 with FTP over SSL (FTPS)

BitKinex 3 has built-in support for FTP over SSL (FTPS), and it supports both Explicit and Implicit FTPS. To specify the FTPS mode, you need to choose the correct mode from the Security drop-down menu for your FTP data source.

Fig. 6 - Specifying the FTPS mode.

Once you have established an FTPS connection through BitKinex 3, the user experience is the same as it is for a standard FTP connection.

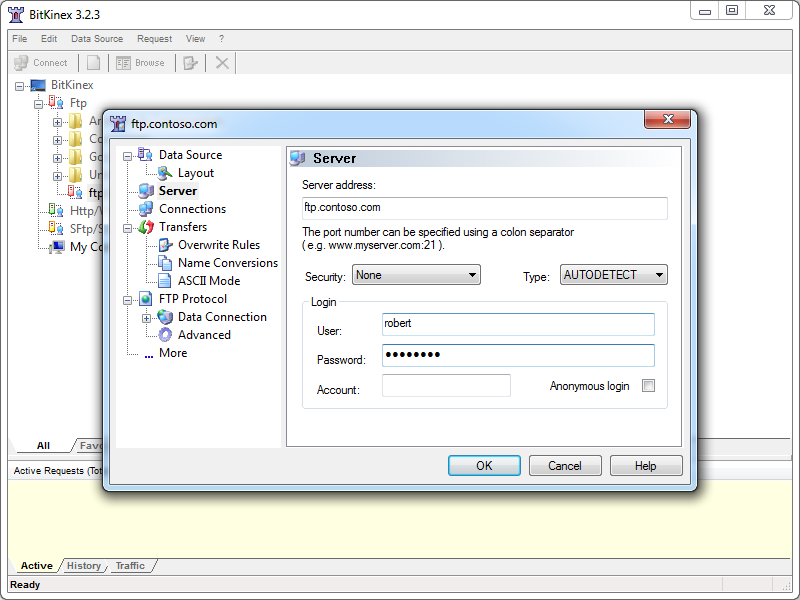

Using BitKinex 3 with True FTP Hosts

True FTP hosts are not supported natively, and even though BitKinex 3 allows you to send a custom command after a data source has been opened, I could not find a way to send a custom command before sending user credentials, so true FTP hosts cannot be used.
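For context, true FTP host names rely on the FTP HOST command (RFC 7151), which a client has to send before the USER command; that is why a custom command that runs after the data source has opened is too late. Here is a minimal Python sketch of the ordering. Note that login_sequence() is a hypothetical helper that I made up for illustration; it is not part of BitKinex or of any FTP library, and it only builds the list of commands rather than talking to a server.

```python
def login_sequence(user, password, true_host=None):
    """Return the FTP control commands for a login, in the order sent.

    Hypothetical helper for illustration only; it does not open a connection.
    """
    commands = []
    if true_host is not None:
        # HOST selects the site, so it must precede the credentials.
        commands.append(f"HOST {true_host}")
    commands.append(f"USER {user}")
    commands.append(f"PASS {password}")
    return commands


for command in login_sequence("username", "password", "ftp.example.com"):
    print(command)
```

A client that can only inject commands after PASS has been accepted cannot produce this sequence, which matches what I saw in my testing.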

Using BitKinex 3 with Virtual FTP Hosts

BitKinex 3's login settings allow you to specify the virtual host name as part of the user credentials by using syntax like "ftp.example.com|username" or "ftp.example.com\username", so you can use virtual FTP hosts with BitKinex 3.

Fig. 7 - Specifying an FTP virtual host.
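If you script your connections, the virtual host login name is just the host name and the user name joined by a separator. The following Python sketch shows the idea; virtual_host_login() is a hypothetical helper of my own, and the two separators are the ones shown in the syntax above.

```python
def virtual_host_login(host_name, user_name, separator="|"):
    """Build a virtual-host login name such as 'ftp.example.com|username'.

    Hypothetical helper for illustration; only the two separators shown
    in the text ('|' and '\\') are accepted.
    """
    if separator not in ("|", "\\"):
        raise ValueError("separator must be '|' or a backslash")
    return f"{host_name}{separator}{user_name}"


print(virtual_host_login("ftp.example.com", "username"))
print(virtual_host_login("ftp.example.com", "username", "\\"))
```

Because the host name travels inside the USER value, this approach works with any client that lets you type an arbitrary user name, which is why BitKinex 3 handles it without special support.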

Scorecard for BitKinex 3

This concludes my quick look at a few of the FTP features that are available with BitKinex 3, and here are the scorecard results:

| Client Name | Directory Browsing | Explicit FTPS | Implicit FTPS | Virtual Hosts | True HOSTs | Site Manager | Extensibility |
|---|---|---|---|---|---|---|---|
| BitKinex 3.2.3 | Rich | Y | Y | Y | N | Y | N/A |

Note: I could not find any way to extend the functionality of BitKinex 3; but as I mentioned earlier, it does support command-line automation.

That wraps it up for this blog - BitKinex 3 is a pretty cool FTP client with a lot of options, and I think that my next plan of action is to try out the WebDAV features that are available in BitKinex 3. ;-)

Note: This blog was originally posted at http://blogs.msdn.com/robert_mcmurray/

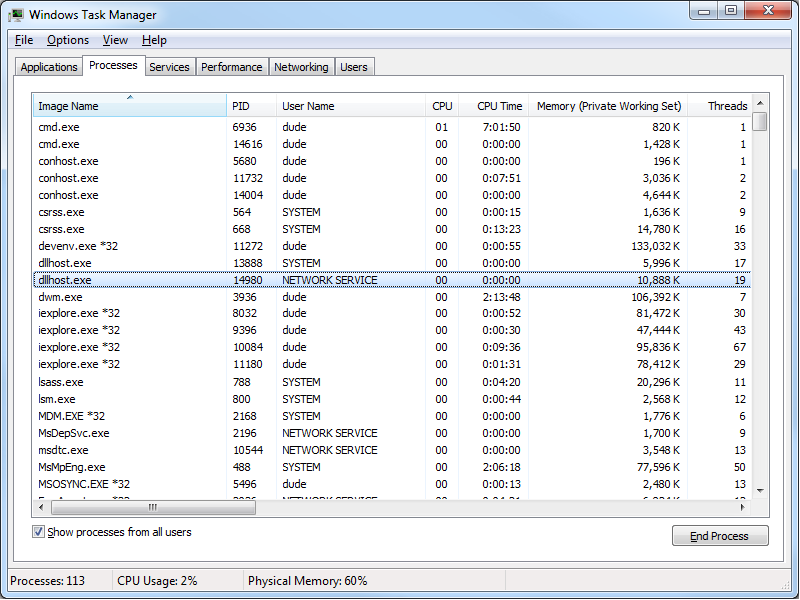

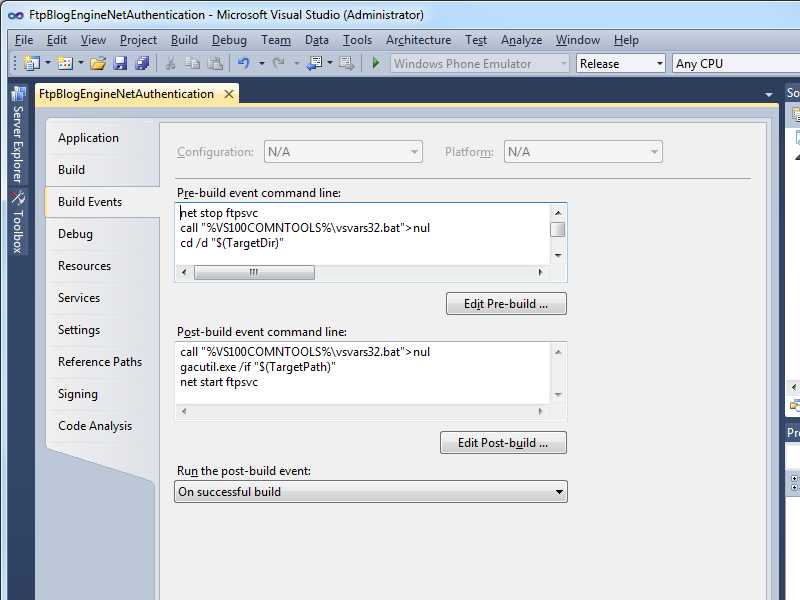

Restarting the FTP Service Orphans a DLLHOST.EXE Process

31 January 2013 • by Bob • Scripting, FTP, Extensibility

I was recently creating a new authentication provider using FTP extensibility, and I ran into a weird behavior that I had seen before. With that in mind, I thought my situation would make a great blog subject because someone else may run into it.

Here are the details of the situation: let's say that you are developing a new FTP provider for IIS, and your code changes never seem to take effect. Your provider appears to be working, it's just that any new functionality is not reflected in your provider's behavior. You restart the FTP service as a troubleshooting step, but that does not appear to make any difference.